Essay by Eric Worrall

“… However, there are still numerous hurdles to overcome before the goal of automated debunking is achieved …”

Hierarchical machine learning models can identify stimuli of climate change misinformation on social media

Communications Earth & Environment volume 5, Article number: 436 (2024)

Abstract

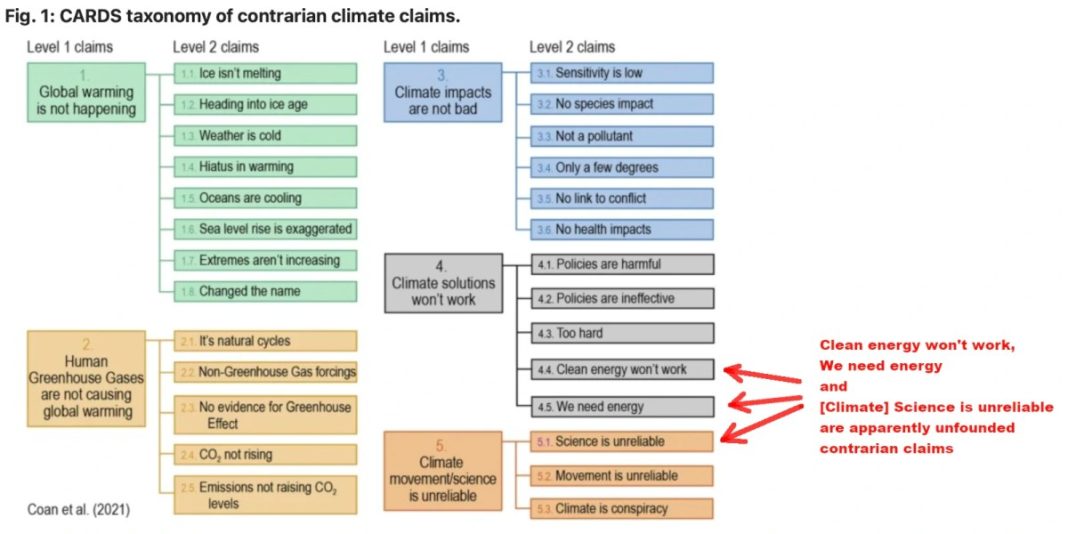

Misinformation about climate change poses a substantial threat to societal well-being, prompting the urgent need for effective mitigation strategies. However, the rapid proliferation of online misinformation on social media platforms outpaces the ability of fact-checkers to debunk false claims. Automated detection of climate change misinformation offers a promising solution. In this study, we address this gap by developing a two-step hierarchical model. The Augmented Computer Assisted Recognition of Denial and Skepticism (CARDS) model is specifically designed for categorising climate claims on Twitter. Furthermore, we apply the Augmented CARDS model to five million climate-themed tweets over a six-month period in 2022. We find that over half of contrarian climate claims on Twitter involve attacks on climate actors. Spikes in climate contrarianism coincide with one of four stimuli: political events, natural events, contrarian influencers, or convinced influencers. Implications for automated responses to climate misinformation are discussed.

Read more: https://www.nature.com/articles/s43247-024-01573-7

The section on the ultimate goal of the project is fascinating.

… These findings have practical implications. Adopting our model could help Twitter/X to augment and enhance ongoing manual fact-checking procedures by offering a computer-assisted procedure for finding the tweets most likely to contain climate misinformation. This adoption could make finding and responding to climate-related misinformation more efficient and help Twitter/X enforce policies to reduce false or misleading claims on the platform. Yet environmental groups have shown that Twitter/X ranks dead last among major social media platforms in its policies and procedures for responding to climate misinformation and there is little evidence that X will improve these procedures in the near term [41]. Alternatively, our model could provide the basis for an API that Twitter/X users could employ to assess climate-related claims they are seeing in their feeds. Overall, the potential practical applications of our model underscores the need for continued academic work to monitor misinformation on Twitter/X and raises important questions on the data needed to hold social media platforms accountable for the spread of false claims.

However, there are still numerous hurdles to overcome before the goal of automated debunking is achieved. An effective debunking requires both explanation of the relevant facts and exposing the misleading fallacies employed by the misinformation. Contrarian climate claims can contain a range of different fallacies, so automatic detection of logical fallacies is another necessary task that, used in concert with the CARDS model, could bring us closer to the “holy grail of fact-checking” [17]. …

Read more: https://www.nature.com/articles/s43247-024-01573-7

Our old friend John Cook was involved with this paper. According to the paper, he is now a researcher at the rather Orwellian-sounding Melbourne Centre for Behaviour Change, which appears to be part of the University of Melbourne Psychology Department. The Centre’s mission statement is “Harnessing research and education to produce sustainable, durable changes in behaviours, policies, and practices that will enhance lives, livelihoods, and environments.”

John Cook suffered a public embarrassment back in 2013, when his collection of creepy artwork became public knowledge, thanks to poor website hygiene. The artwork featured a self-portrait of John Cook dressed in a Nazi uniform, and included a picture of prominent climate skeptics, including Anthony Watts, dressed as semi-naked Roman gladiators. But I’m sure this unusual art hobby in no way affects Cook’s work for the Centre for Behaviour Change’s mission to “produce sustainable, durable changes in behaviours”.

I couldn’t figure out who funded the AI paper, but given the authors’ close affiliations with major Australian and British universities, I’m pretty confident tax dollars feature somewhere in the picture.
